Grow is a Vive game about plants that think they are machines.

When working with VR, most of the old design conventions for screen-based applications go out of the window. Normal interaction and feedback methods simply don't work, so you have to design less for someone using a computer, and more for a person standing in a room, interacting directly with whatever it is you are having them interact with. In some ways this makes things easier, in others it makes things harder, but the core requirement remains the same: to create clear and intuitive methods of interaction with your application. Grow was an experiment in that.

I wanted to create a game about synergies, about an ecosystem, or about machines. Something that met halfway between puzzling and exploratory, that rewarded the player for figuring things out. No manuals, tutorials, or text: the player would learn everything through play. At first, the player has a single plant and a watering can. Most players will do the obvious thing and water it, and see that doing so causes it to bear fruit.

If you want to play it "as intended", without spoilers, skip to the bottom and download the game from there. I'll wait.

Every plant in Grow has a function. It requires something, and produces something. The first plant, the Goodnut plant, requires water and produces nuts. The second plant, the Spigot, eats nuts and produces water. The two lean on each other, and discovering this relationship is key to understanding how the plants work. Of course, a player can just plant a pretty garden if they like, but they are likely to stumble across the interactions eventually.
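As a rough illustration of that "requires something, produces something" rule, here is a minimal Python sketch of the Goodnut/Spigot loop. It is not the game's actual code: the `Plant` class, its `feed` and `tick` methods, and the `run_loop` helper are all hypothetical names made up for this example, and it assumes one unit of input yields one unit of output.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Plant:
    """Hypothetical model: a plant consumes one resource and produces another."""
    name: str
    requires: str
    produces: str
    stock: int = 0  # units of the required resource currently held

    def feed(self, resource: str) -> bool:
        """Accept a resource only if it is the one this plant requires."""
        if resource != self.requires:
            return False
        self.stock += 1
        return True

    def tick(self) -> Optional[str]:
        """Turn one held unit into one unit of output, if any is held."""
        if self.stock == 0:
            return None
        self.stock -= 1
        return self.produces


def run_loop(first: Plant, second: Plant, resource: str, steps: int) -> List[str]:
    """Pass a resource back and forth between two plants, logging each output."""
    plants = [first, second]
    produced: List[str] = []
    for i in range(steps):
        plant = plants[i % 2]
        if not plant.feed(resource):
            break  # the chain stops when a plant is handed the wrong thing
        resource = plant.tick()
        produced.append(resource)
    return produced


goodnut = Plant("Goodnut", requires="water", produces="nut")
spigot = Plant("Spigot", requires="nut", produces="water")
print(run_loop(goodnut, spigot, "water", 4))
```

Starting the pair off with a single unit of water is the equivalent of the player's first use of the watering can; after that, each plant's output is exactly what the other one needs.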

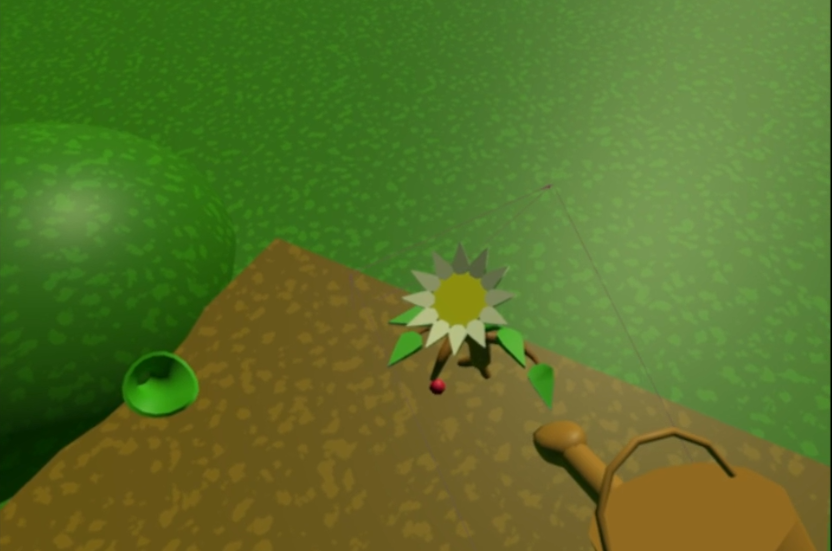

Feedback on how these work needs to be very clear. Plants that need nuts have mouths, and plants that need water have flowers that bloom when they are watered. You can see both of these effects in the example above, and savvy players might notice that placing them close enough together will keep the area watered indefinitely. What to do with all this water?

Make a factory.

In Grow, players are only limited by the size of the garden. As long as they can fit the plants in, they can have as many as they want. Plants can only be planted on the soil, but can be moved about and re-positioned as needed. Here we see a line of Goodnuts being planted on either side of a Shufflebush. The Shufflebush works like a conveyor belt, requiring water and pushing items along. It is shaped like a half pipe, and can be placed anywhere, even in midair, which opens up some interesting opportunities.

Later plants introduce different resources, such as syrup. Syrup cannot be moved by hand, so players will need to figure out a suitable way to get it where they need it.

Players can create simple, efficient gardens, crazy Rube Goldberg machines, or anything in between. I tried to create a sense of wonder and discovery in finding out how things work, and while there is a "goal", it is left deliberately vague; if a player is having fun, I don't want to get in the way with my silly rules.

Grow could potentially be expanded with more plants, more plots to work with, and more areas to explore. Getting the player and resources from one plot to another is a particularly interesting challenge. There are a lot of tweaks that could improve it, such as a better, physics-enabled pickup system, so I am likely to keep updating it.

EDIT: Here is a newer, better demo, shown at vrLAB in Brighton. https://drive.google.com/open?id=0BzvAo5Z23YJSZHl6YWp6Y0FxVWc

You can download the old prototype here. The menu button opens and closes your plantsack, and the trigger grabs. You can quit the game by pressing Esc.